On 2024/05/09 (JST), Stability AI released a new Japanese large language model, “Japanese Stable LM 2 1.6B”.

This LLM is both small and high-performing, and it appears to run well in relatively modest environments. In this article, we confirm that the latest sample can be tried with very short code on Google Colab, with no special environment required.

First, here is the official release from Stability AI:

https://ja.stability.ai/blog/japanese-stable-lm-2-16b

https://x.com/StabilityAI_JP/status/1788438857320681756

- Japanese Stable LM 2 1.6B (JSLM2 1.6B) is a small language model with 1.6 billion parameters.

- By reducing the model size to 1.6 billion parameters, the required hardware can be kept small, allowing more developers to participate in the ecosystem of Creative AI.

- We offer a base model, Japanese Stable LM 2 Base 1.6B, and an instruction-tuned model, Japanese Stable LM 2 Instruct 1.6B. Both models can be used commercially with a Stability AI membership and are available for download from Hugging Face.

Stability AI Japan has released both the base model and the instruction-tuned model of the Japanese language model Japanese Stable LM 2 1.6B (JSLM2 1.6B), which has 1.6 billion parameters.

The base model was trained on language data from sources such as Wikipedia and CulturaX, while instruction tuning used commercial and public data including jaster, Ichikara-Instruction, and a Japanese translation of Ultra Orca Boros v1. JSLM2 1.6B uses the latest algorithms in language modeling to balance speed and performance, allowing rapid experimentation on moderate hardware resources.

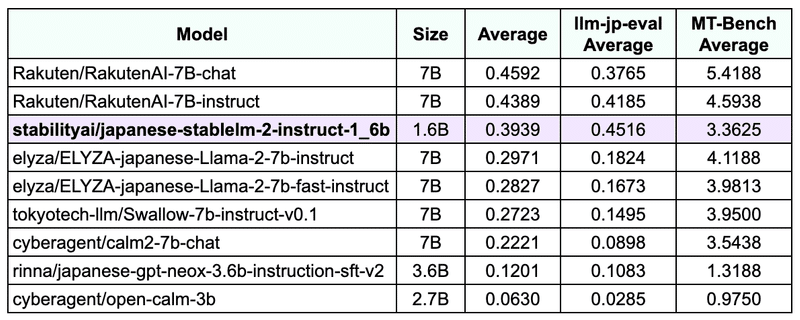

Performance Evaluation

Using the Nejumi leaderboard, we compared the performance of JSLM2 1.6B with other models of similar parameter sizes. This time, we used commit c46e165 from the internal fork of the llm-leaderboard.

Despite being a small model with 1.6 billion parameters, it achieved higher scores than models with up to 4 billion parameters and scores close to those of 7 billion parameter models.

Releasing a high-performance, small language model lowers the barriers to language model development, enabling faster experimental iterations. However, due to the smaller number of parameters, this model may be more prone to hallucinations or errors compared to larger models. Please take appropriate precautions when using it in applications. We hope that the release of JSLM2 1.6B will contribute to the further development and advancement of Japanese LLMs.

Commercial Use

JSLM2 1.6B is one of the models provided with the Stability AI membership. If you want to use it commercially, please register on the Stability AI membership page and self-host the model.

Check the latest information from Stability AI on their official X and Instagram.

(End of official release information)

Using it on Google Colab

Let’s try it on Google Colab right away.

Following the official sample code, create a new project on Google Drive and run it in a Google Colab notebook.

(Links to fully functional code are introduced at the end of this article)

Google Colab allows you to experiment without worrying about GPU or memory size, making it an easy environment for learning.

Here is the official sample code:

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM

    model_name = "stabilityai/japanese-stablelm-2-instruct-1_6b"
    tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

    # The next line may need to be modified depending on the environment
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype=torch.float16,
        low_cpu_mem_usage=True,
        device_map="auto",
        trust_remote_code=True,
    )

    prompt = [
        {"role": "system", "content": "あなたは役立つアシスタントです。"},
        {"role": "user", "content": "「情けは人のためならず」ということわざの意味を小学生でも分かるように教えてください。"},
    ]

    inputs = tokenizer.apply_chat_template(
        prompt,
        add_generation_prompt=True,
        return_tensors="pt",
    ).to(model.device)

    # this is for reproducibility.
    # feel free to change to get different result
    seed = 23
    torch.manual_seed(seed)

    tokens = model.generate(
        inputs,
        max_new_tokens=128,
        temperature=0.99,
        top_p=0.95,
        do_sample=True,
    )

    out = tokenizer.decode(tokens[0], skip_special_tokens=False)
    print(out)

Applying for Repository Access

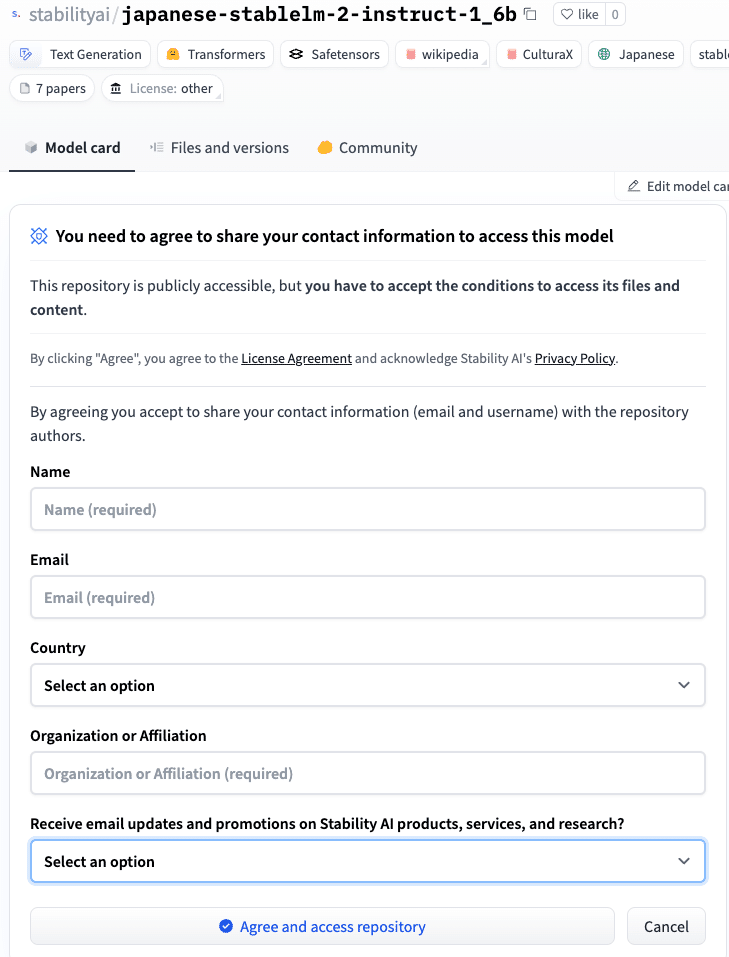

First, create an account on Hugging Face, apply for access to the Stability AI repository from the model card, and answer the following questions.

The key point is to enter your email address at the end to receive the email newsletter.

✨️ Note that AICU is a commercial member of Stability AI ✨️

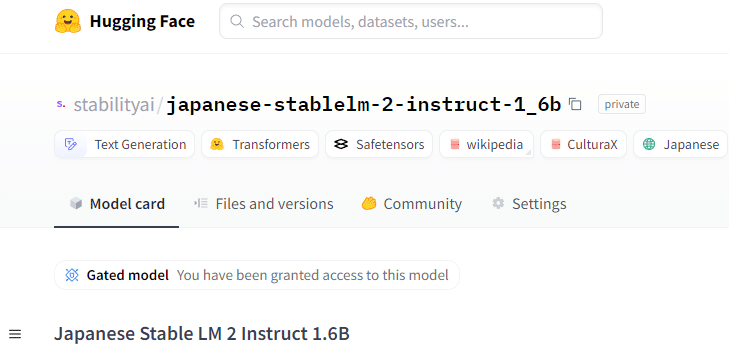

Once your access application is approved, the form is no longer displayed and “Gated model” is shown instead.

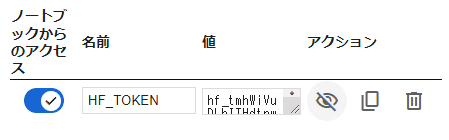

Google Colab Secrets Feature

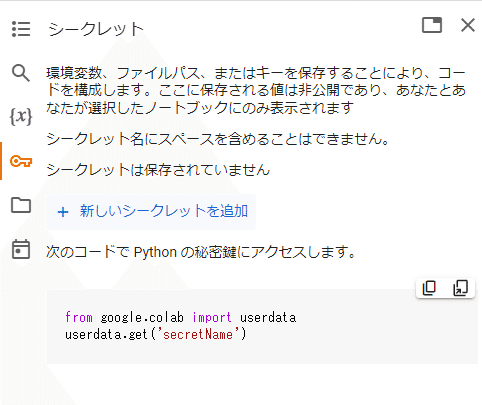

Recently, Google Colab and Hugging Face added a feature that lets you define an environment variable called “HF_TOKEN” in the “Secrets” panel on the left side of Colab.

Secrets let you store environment variables, file paths, or keys and use them to configure your code. The values stored here are private and visible only to you and the notebooks you select.

Press “+ Add new secret”.

Next, create a token in Hugging Face under “Settings” > “Access Tokens”.

https://huggingface.co/settings/tokens

Name it “Colab-HF_TOKEN” and set the permission to “WRITE” (it will work with READ only, but Hugging Face recommends WRITE).

Paste the obtained user access token (the string starting with hf_) into the “Value” field of “HF_TOKEN”. Turn on “Access from notebook”.

Now you can read the Hugging Face token in your notebook simply by writing `token = userdata.get('HF_TOKEN')` (after `from google.colab import userdata`).

Of course, you can also paste your Hugging Face token directly into the Python script if you prefer. Alternatively, you can log in with the command `!huggingface-cli login --token $token`, which should work as well!
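A minimal sketch of a token lookup that works both inside and outside Colab follows. The helper name `get_hf_token` and the fallback to an `HF_TOKEN` environment variable are assumptions for illustration, not part of the official sample; `userdata.get` is the Colab call mentioned above.

```python
import os
from typing import Optional


def get_hf_token(secret_name: str = "HF_TOKEN") -> Optional[str]:
    """Return the Hugging Face token from Colab Secrets, or from the environment.

    Hypothetical helper: the environment-variable fallback is an assumption
    for running the same code outside Google Colab.
    """
    try:
        # Only importable inside a Google Colab runtime.
        from google.colab import userdata
    except ImportError:
        # Outside Colab: fall back to an environment variable of the same name.
        return os.environ.get(secret_name)
    # Inside Colab: requires "Notebook access" to be enabled for the secret.
    return userdata.get(secret_name)


token = get_hf_token()
print("token found" if token else "token not found")
```

The returned value can then be passed as `token=...` when calling `from_pretrained`, which keeps the token itself out of the notebook source.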

Running the Script

Here is the code.
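For readers without the notebook at hand, a minimal sketch of a script of this kind follows; it is not the notebook's exact code. It assumes the base model ID `stabilityai/japanese-stablelm-2-base-1_6b`, that access to the gated repository has been granted, that `accelerate` is available for `device_map="auto"`, and that `hf_token` holds the token read from Colab Secrets. The function name `generate_continuation` and its defaults are hypothetical; the sampling settings mirror the official sample.

```python
def generate_continuation(
    prompt: str,
    hf_token: str,
    model_name: str = "stabilityai/japanese-stablelm-2-base-1_6b",  # assumed model ID
    max_new_tokens: int = 128,
) -> str:
    """Continue `prompt` with sampled text (hypothetical helper)."""
    # Heavy imports live inside the function so that defining it stays cheap.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(
        model_name, trust_remote_code=True, token=hf_token
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype="auto",      # use the dtype stored in the checkpoint
        device_map="auto",       # place weights on the GPU when one is available
        trust_remote_code=True,  # skips the interactive custom-code prompt
        token=hf_token,          # needed because the repository is gated
    )

    # Tokenize the raw prompt (no chat template for a plain continuation).
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        tokens = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            temperature=0.99,
            top_p=0.95,
            do_sample=True,
        )
    return tokenizer.decode(tokens[0], skip_special_tokens=True)


# Example call (downloads the model, so it is left commented out here):
# print(generate_continuation("吾輩は猫である、名前は…", hf_token="hf_..."))
```

Because sampling is enabled, each call returns a different continuation unless a seed is fixed with `torch.manual_seed` beforehand.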

吾輩は猫である、名前は…

Setting `pad_token_id` to `eos_token_id`:100257 for open-end generation.

吾輩は猫である、名前は…まだない。 そもそも「名前を付ける必要があるのか?」 それを考えるのに、10歳を目前にしている吾輩である。 しかし、他の猫達との差別化という意味では、あった方が良いとも思うし、なんせ名前があるという安心感から、猫は猫らしく、自由気ままにやりたい

Colab lets you choose the runtime type; even on a CPU, results are generated in less than a minute.

吾輩は猫である、名前は…名無しである。 名前はない。 何故なら、吾輩は名前を言いたくないのだ。 それを、そのことを知っている奴らが「名無し」と呼ぶのである。 そいつらを、「名付き」の奴らと呼ぼう。 吾輩が名無しと呼ばれるのは「何も持っていないから」である。 何も持っていないから、それ

吾輩は猫である、名前は… といえるほど、名前が思い浮かばないのは、私だけでしょうか? そうは言っても、「猫じゃらし」は、その場によって名前のバリエーションがたくさんありますよね。 そういう「猫じゃらし」が持つ、イメージとか、意外な性格とか、飼い猫のネコじゃらしの場合を

吾輩は猫である、名前は…まだないのである。 ここは、吾輩が住み慣れた部屋、何も特徴のないところだ。 場所は不確かだが、とにかく部屋だけはここになって、ずっとこの部屋で暮らしているのだ。 なんでこんなことを言っているかというと、吾輩の部屋が消えて、別…

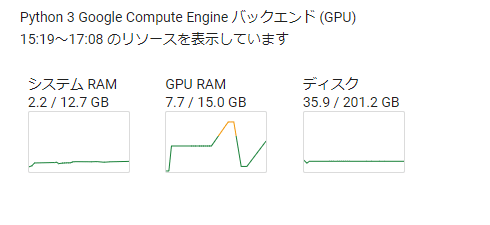

Next, we tried running it on a T4 GPU.

The following text was generated in 4–5 seconds.

If “Do you wish to run the custom code? [y/N]” appears, press y and hit Enter (this may depend on the environment).

GPU memory (GPU RAM/VRAM) usage is 7.7GB, so the model seems likely to run even within the 8GB of VRAM found in many gaming PCs.
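As a rough cross-check on memory figures like this, the space taken by the weights alone can be estimated from the parameter count. The sketch below is back-of-the-envelope arithmetic, not a measurement: it assumes a nominal 1.6 billion parameters and ignores activations, the KV cache, and CUDA runtime overhead, so actual usage will be higher. The function name `weight_memory_gib` is hypothetical.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


# Assumption: nominal model size; the exact parameter count differs slightly.
NUM_PARAMS = 1.6e9

for dtype_name, nbytes in [("float16", 2), ("float32", 4)]:
    gib = weight_memory_gib(NUM_PARAMS, nbytes)
    print(f"{dtype_name}: about {gib:.1f} GiB for weights")
```

Under these assumptions the weights come to roughly 3 GiB in float16 and 6 GiB in float32, which is why the choice of `torch_dtype` matters on smaller GPUs.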

The warning “Setting pad_token_id to eos_token_id:100257 for open-end generation.” may appear; it is a good idea to use this information to set `pad_token_id` explicitly in your own code.
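One way to act on this warning is to pass `pad_token_id` explicitly to `generate`. The sketch below only builds the keyword arguments, so it runs without loading the model. The helper name `build_generation_kwargs` is hypothetical, and 100257 is the `eos_token_id` reported in the warning; in real code, `tokenizer.eos_token_id` would be the natural source.

```python
def build_generation_kwargs(eos_token_id: int, max_new_tokens: int = 128) -> dict:
    """Sampling settings from the official sample, plus an explicit pad token."""
    return {
        "max_new_tokens": max_new_tokens,
        "temperature": 0.99,
        "top_p": 0.95,
        "do_sample": True,
        # Setting this explicitly is what silences the pad_token_id warning.
        "pad_token_id": eos_token_id,
    }


# Value taken from the warning message; normally read from tokenizer.eos_token_id.
gen_kwargs = build_generation_kwargs(eos_token_id=100257)
print(gen_kwargs)

# With a loaded model this would be used as:
# tokens = model.generate(inputs, **gen_kwargs)
```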

Try the Instruct Version with GUI for Chatbots!

Our fellow advocate DELL has also published a Google Colab notebook for Japanese Stable LM 2 Instruct 1.6B.

You can enjoy Japanese chat using the Gradio interface!
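That notebook is not reproduced here. As a sketch of the glue a chat UI of this kind needs, the function below turns a chat history into the message list format that the official sample passes to `apply_chat_template`. The function name `build_messages` and the history format (a list of `(user, assistant)` pairs) are assumptions for illustration, as is the idea that the model's chat template accepts multi-turn history in this shape.

```python
from typing import Dict, List, Tuple

# System prompt taken from the official sample code.
SYSTEM_PROMPT = "あなたは役立つアシスタントです。"


def build_messages(
    history: List[Tuple[str, str]],
    user_message: str,
    system_prompt: str = SYSTEM_PROMPT,
) -> List[Dict[str, str]]:
    """Convert (user, assistant) pairs plus a new user turn into chat messages."""
    messages = [{"role": "system", "content": system_prompt}]
    for user_turn, assistant_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    # The newest user message always comes last.
    messages.append({"role": "user", "content": user_message})
    return messages


history = [("こんにちは", "こんにちは!ご用件は何でしょうか。")]
messages = build_messages(history, "日本の首都はどこですか?")
for message in messages:
    print(message["role"], ":", message["content"])
```

The resulting list could then be passed to `tokenizer.apply_chat_template(..., add_generation_prompt=True)` in the same way as `prompt` in the official sample.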

Takeaways

In summary, we used Google Colab's Secrets feature to write short code that runs the latest Japanese large language model, “Japanese Stable LM 2 1.6B”, released by Stability AI.

Google Colab also has the advantage of coming with Transformers pre-installed, alongside features like Secrets. This makes it a better starting point than trying the model directly in a local environment right away!

At AICU media, we aim to increase experimental topics on Japanese LLMs. We would appreciate your feedback, shares, and comments on X (Twitter).

We are also continuously recruiting aspiring writers and student interns to test their skills.

Originally published at https://ja.aicu.ai on May 10, 2024.
